Remapping Movement of Shoulder with Soft Sensors: A Non-invasive Wearable Body-Machine Interface

IEEE Sensors Journal, 2024

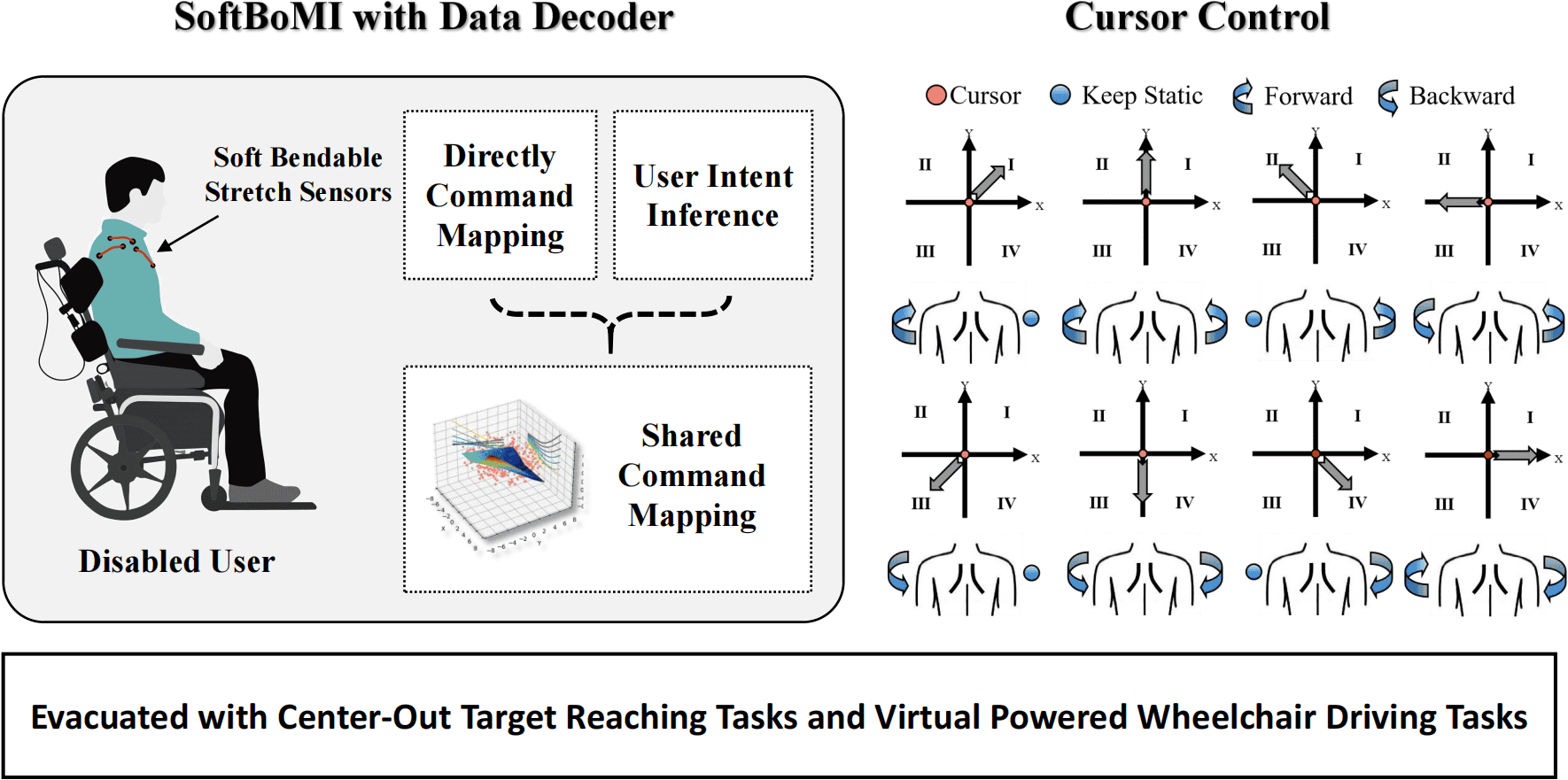

Soft sensors are typically composed of flexible materials capable of bending and stretching, thereby accommodating various human movements. This study presents a human-machine interface that uses silicone-based soft sensors and inertial measurement units (IMUs) to convert the shoulder movements of amputees and quadriplegics into continuous two-dimensional commands for controlling assistive devices. We developed a rule-based data decoding method and an intent-inference-based data decoding method to generate real-time commands. Furthermore, by integrating prior knowledge of the user's manipulation performance into a shared autonomy framework, we implemented a shared command mapping approach to improve overall command generation performance. To validate the proposed interface prototype, we conducted center-out target reaching tasks and a virtual wheelchair driving task with nine healthy subjects. The experimental results indicate that soft sensors can effectively capture human shoulder movements and thereby generate reliable interaction commands.

DOI: 10.1109/JSEN.2024.3440325

Link: https://ieeexplore.ieee.org/document/10654754/references#references