Journal of Neural Engineering, 2024

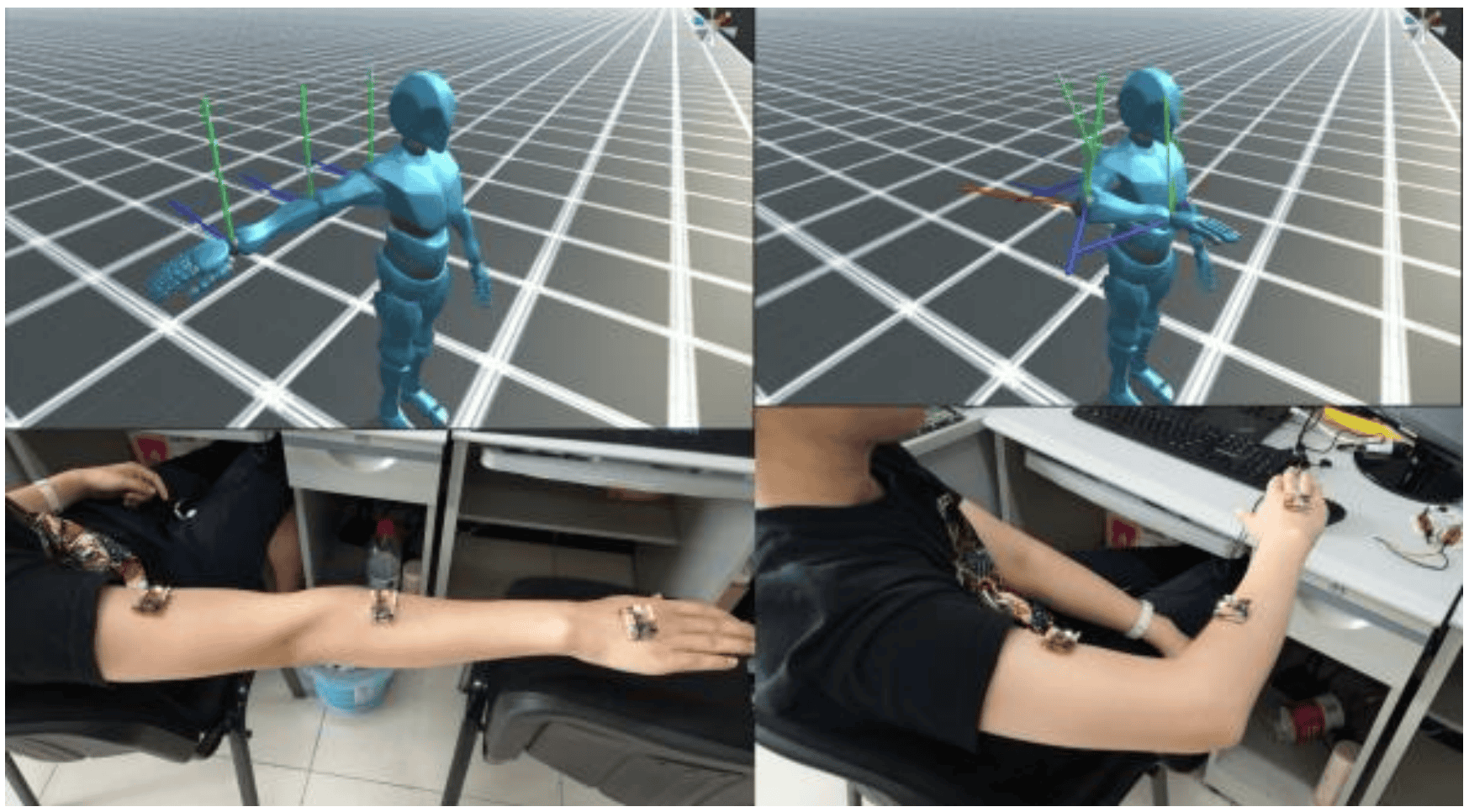

Objective: Customized human-machine interfaces for controlling assistive devices are vital for improving the self-help ability of upper-limb amputees and tetraplegic patients. Because most of these individuals retain residual shoulder mobility, shoulder movements can be used to generate commands for operating assistive devices, offering a complementary approach to brain-computer interfaces.

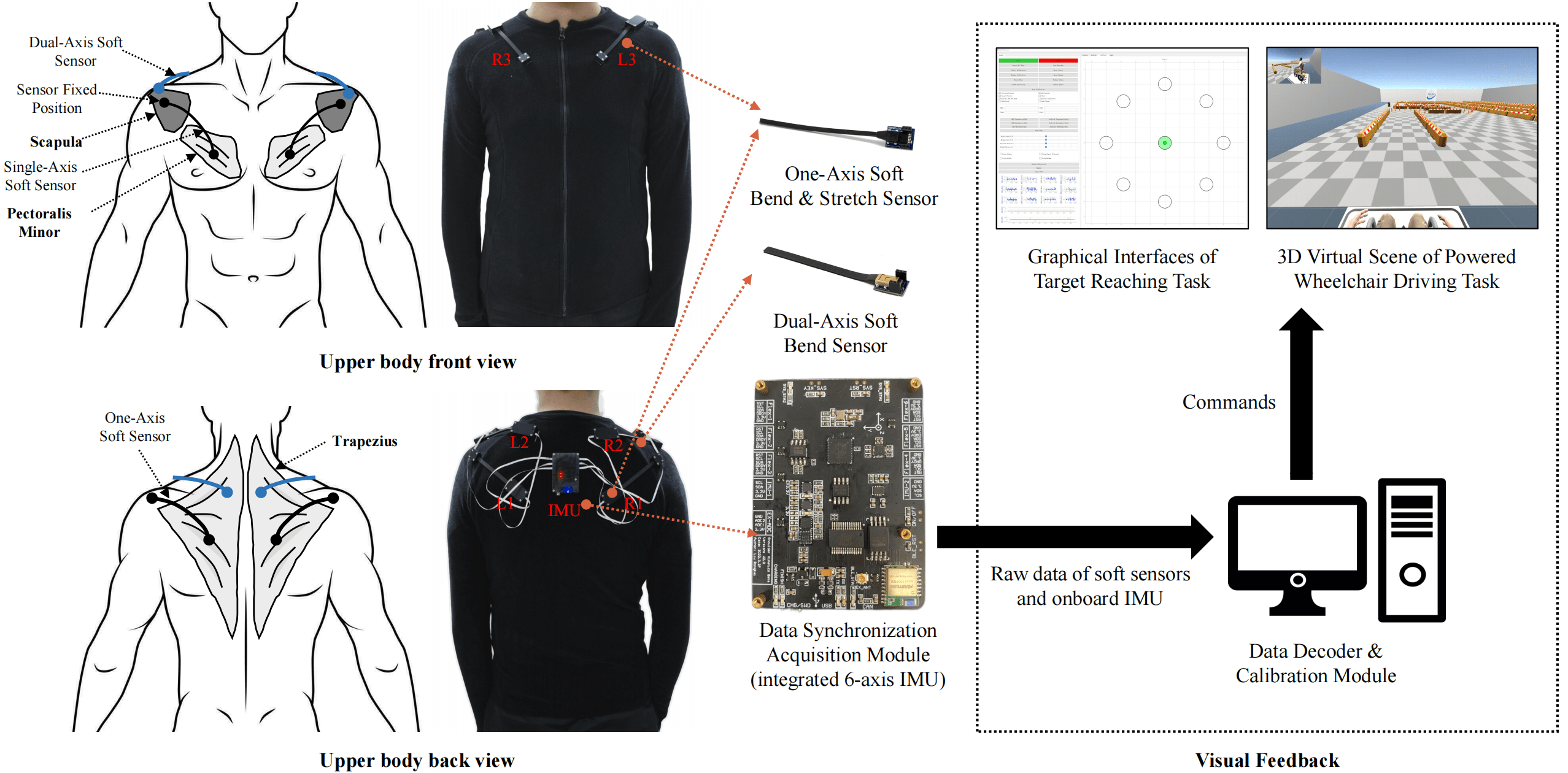

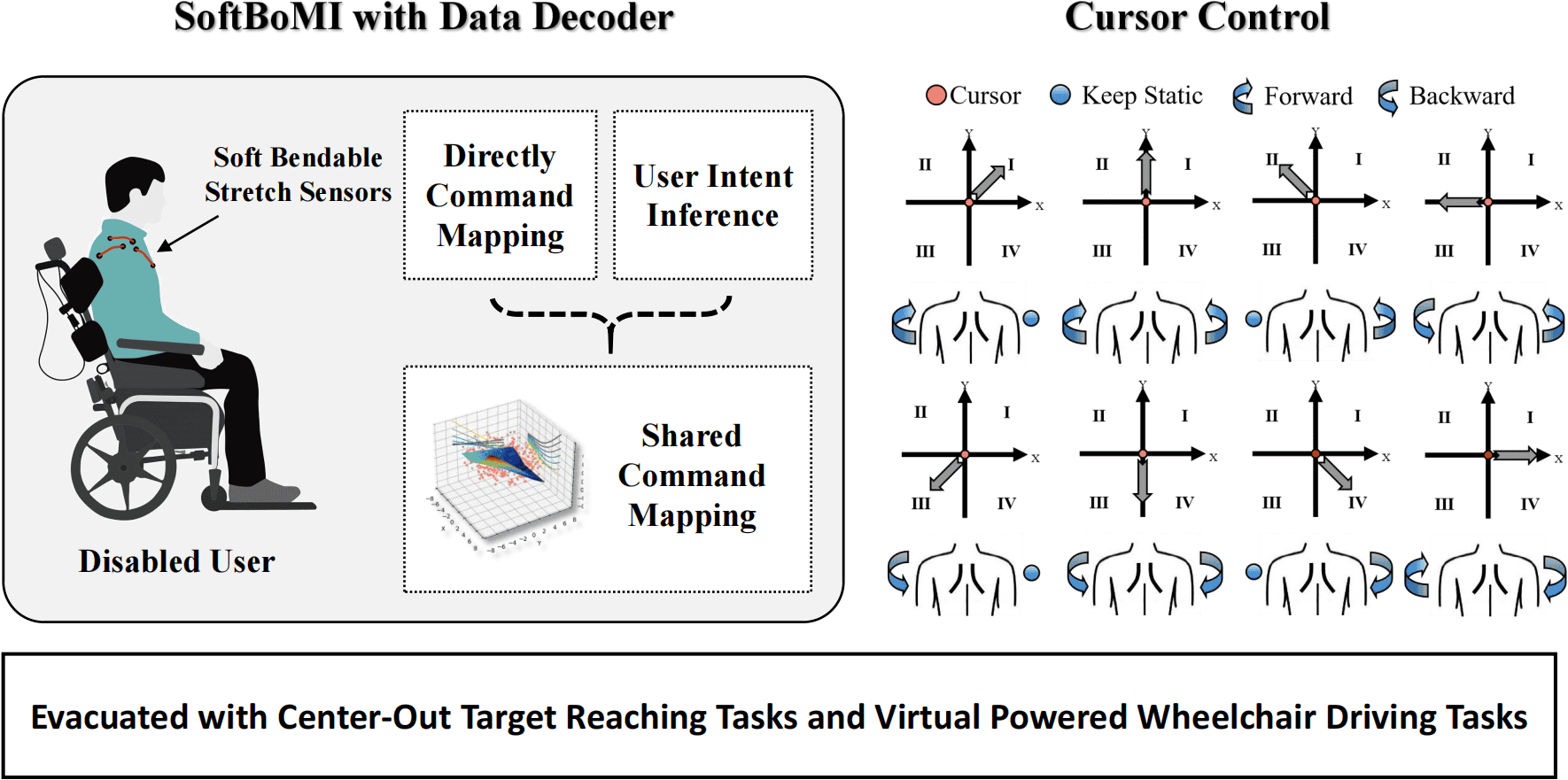

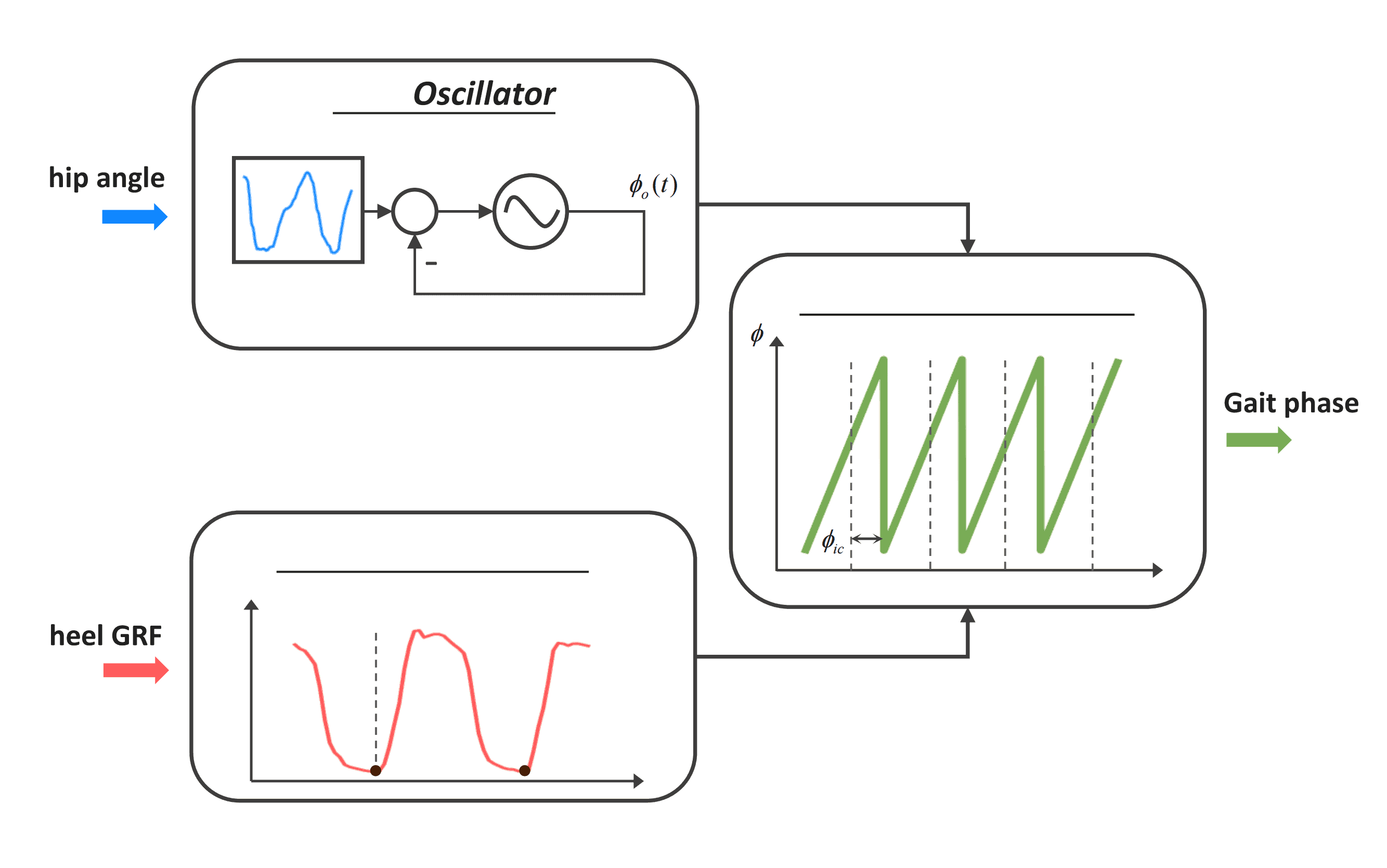

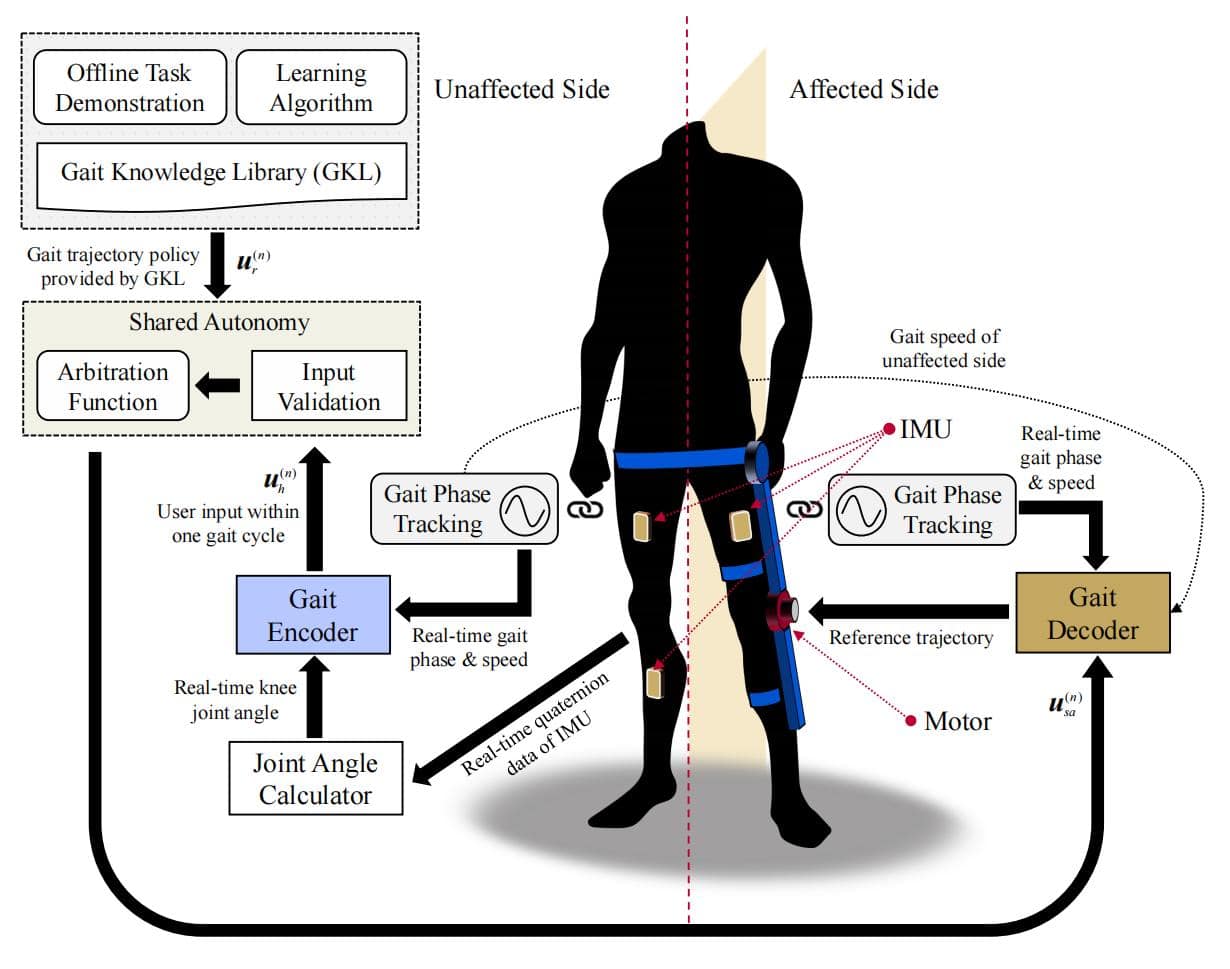

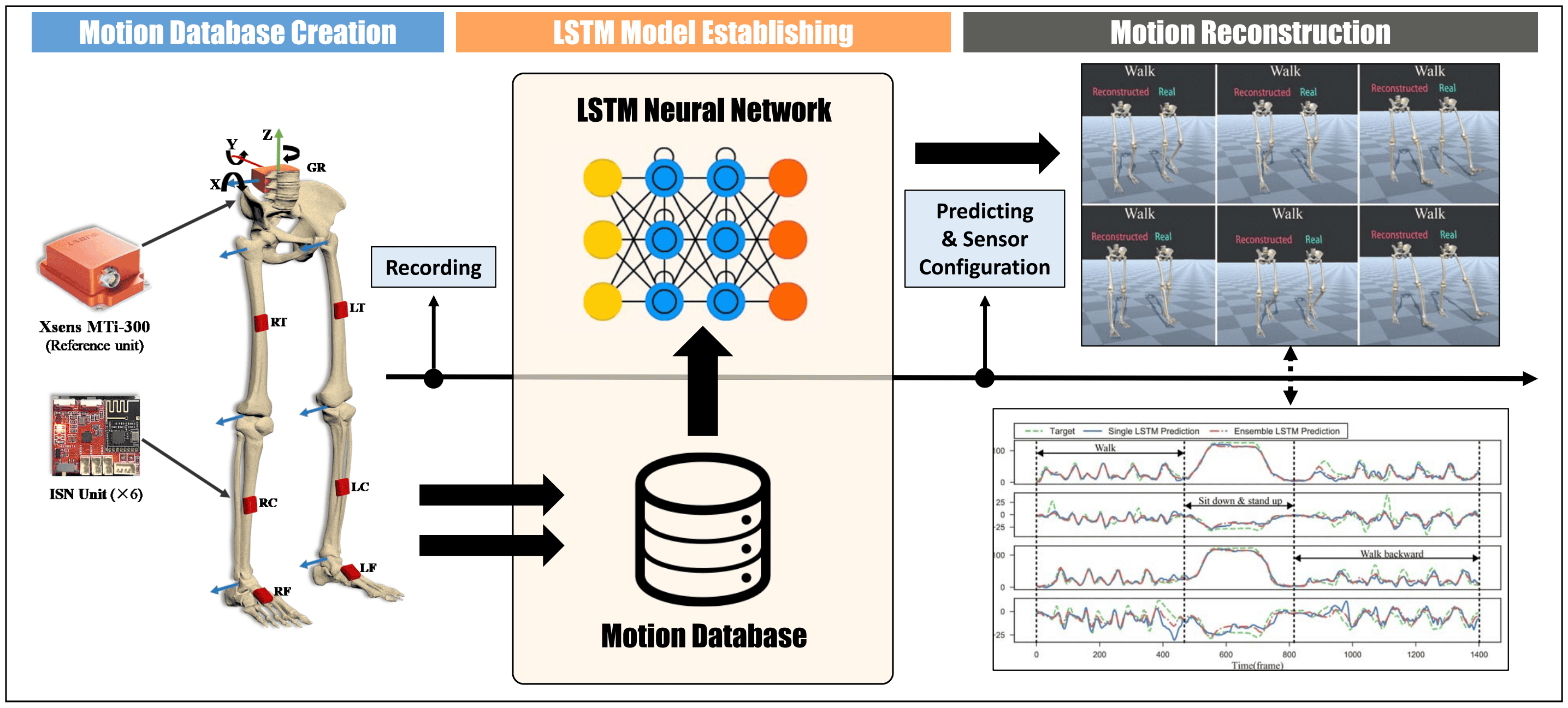

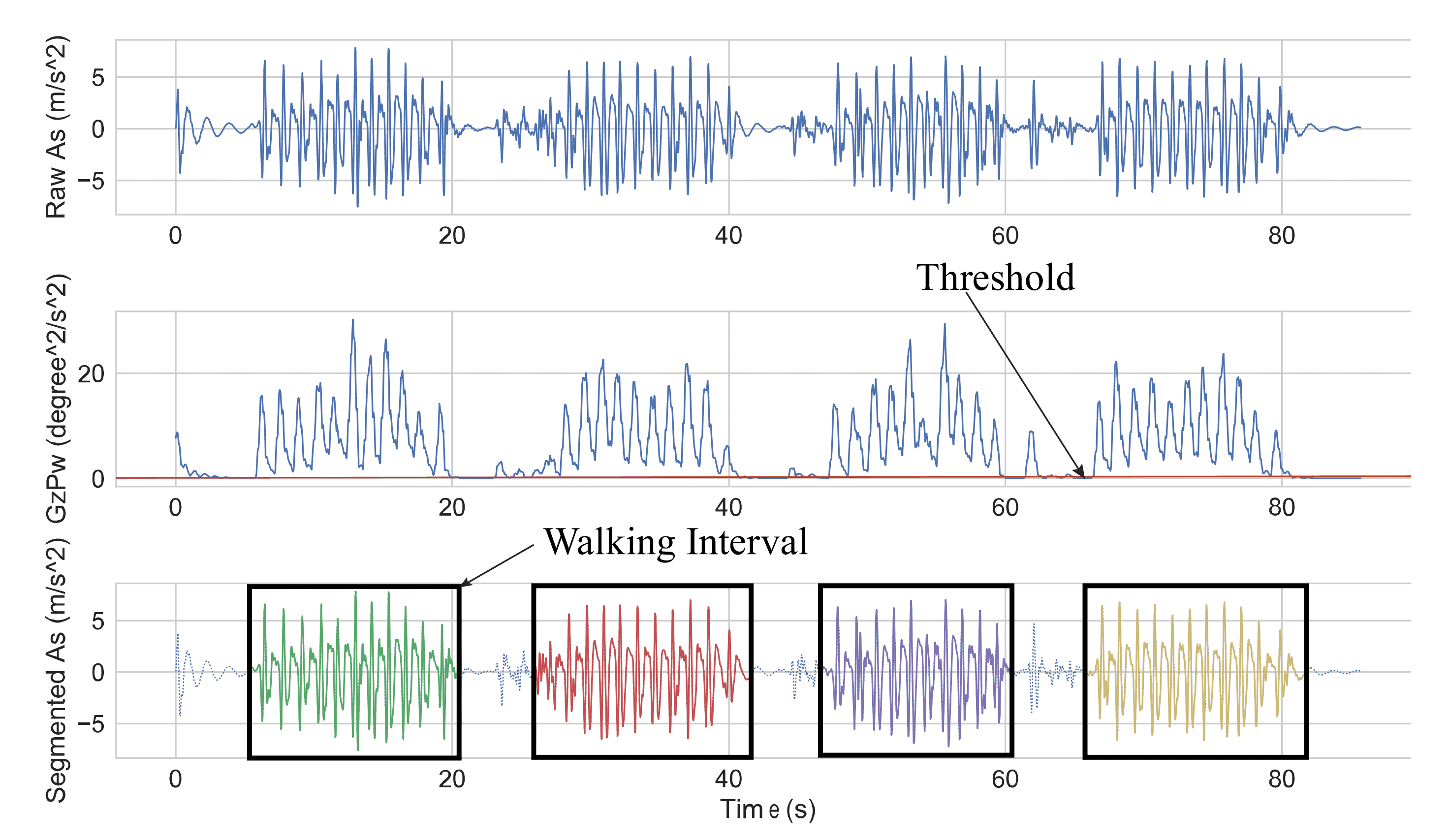

Approach: We propose a hybrid body-machine interface prototype that integrates soft sensors and an inertial measurement unit. We introduce two decoding methods, one rule-based and one based on user intent inference, to map shoulder movements to continuous commands. In addition, by incorporating prior knowledge of the user's operational performance into a shared autonomy framework, we implement an adaptive switching command mapping approach that transitions seamlessly between the two decoding methods, improving adaptability across different tasks.
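The abstract does not specify how either decoder or the switching rule is implemented, so the following is only an illustrative sketch under assumed choices, not the authors' method. It assumes shoulder signals already normalized to [-1, 1], a hypothetical rule-based mapping (elevation drives speed, protraction drives turning, with a dead zone), a hypothetical intent classifier that outputs probabilities over four discrete intents, and hypothetical per-user prior performance scores (`prior_rule`, `prior_intent`). The switch picks the decoder with the higher prior-weighted score and moves a mixing weight toward it gradually, which is one simple way to avoid jumps in the output command when switching.

```python
from dataclasses import dataclass


@dataclass
class Command:
    linear: float   # normalized forward speed in [-1, 1]
    angular: float  # normalized turn rate in [-1, 1]


def _clip(x: float) -> float:
    return max(-1.0, min(1.0, x))


def rule_based_decode(elevation: float, protraction: float,
                      dead_zone: float = 0.1, gain: float = 2.0) -> Command:
    """Hypothetical rule: shoulder elevation -> speed, protraction -> turning.
    Inputs are assumed normalized to [-1, 1]; small movements are ignored."""
    def shape(v: float) -> float:
        if abs(v) <= dead_zone:
            return 0.0
        magnitude = gain * (abs(v) - dead_zone)
        return _clip(magnitude if v > 0 else -magnitude)
    return Command(shape(elevation), shape(protraction))


# Hypothetical prototype command for each discrete intent class.
INTENT_PROTOTYPES = {
    "forward": Command(1.0, 0.0),
    "left": Command(0.0, 1.0),
    "right": Command(0.0, -1.0),
    "stop": Command(0.0, 0.0),
}


def intent_decode(probs: dict[str, float]) -> tuple[Command, float]:
    """Expected command under an (assumed) intent classifier's posterior;
    also returns the top-class probability as a confidence score."""
    linear = sum(p * INTENT_PROTOTYPES[k].linear for k, p in probs.items())
    angular = sum(p * INTENT_PROTOTYPES[k].angular for k, p in probs.items())
    return Command(linear, angular), max(probs.values())


def adaptive_switch(rule_cmd: Command, intent_cmd: Command, confidence: float,
                    prior_rule: float, prior_intent: float,
                    alpha: float, rate: float = 0.2) -> tuple[Command, float]:
    """Select the decoder with the higher prior-weighted score, but move the
    mixing weight alpha (0 = rule-based, 1 = intent) gradually toward it."""
    target = 1.0 if confidence * prior_intent > prior_rule else 0.0
    alpha += rate * (target - alpha)
    cmd = Command(alpha * intent_cmd.linear + (1 - alpha) * rule_cmd.linear,
                  alpha * intent_cmd.angular + (1 - alpha) * rule_cmd.angular)
    return cmd, alpha


if __name__ == "__main__":
    rule_cmd = rule_based_decode(elevation=0.5, protraction=-0.05)
    intent_cmd, conf = intent_decode(
        {"forward": 0.9, "left": 0.05, "right": 0.05, "stop": 0.0})
    alpha = 0.0
    for step in range(10):
        cmd, alpha = adaptive_switch(rule_cmd, intent_cmd, conf,
                                     prior_rule=0.6, prior_intent=0.8,
                                     alpha=alpha)
        print(step, round(alpha, 3), cmd)
```

Here a confident intent estimate from a user whose intent decoder performed well gradually takes over from the rule-based command; when confidence drops, the weight decays back toward the rule-based decoder. A real system would need the prior scores, thresholds, and smoothing rate to be calibrated per user and task.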

Main results: The proposed method was validated on participants with cervical spinal cord injury, participants with bilateral arm amputation, and healthy subjects through a series of center-out target-reaching tasks and a virtual powered-wheelchair driving task. The experimental results show that combining the soft sensors with the gyroscope yields the most well-rounded intent-inference performance. In addition, the rule-based method shows better dynamic performance for wheelchair operation, whereas the intent inference method is more accurate but has higher latency. The adaptive switching method offers the best adaptability, transitioning seamlessly between the two decoding methods across tasks. Finally, we discuss the differences and characteristics among the participant groups in the experiment.

Significance: The proposed method has the potential to be integrated into clothing, enabling non-invasive interaction with assistive devices in daily life, and could serve as a tool for rehabilitation assessment in the future.